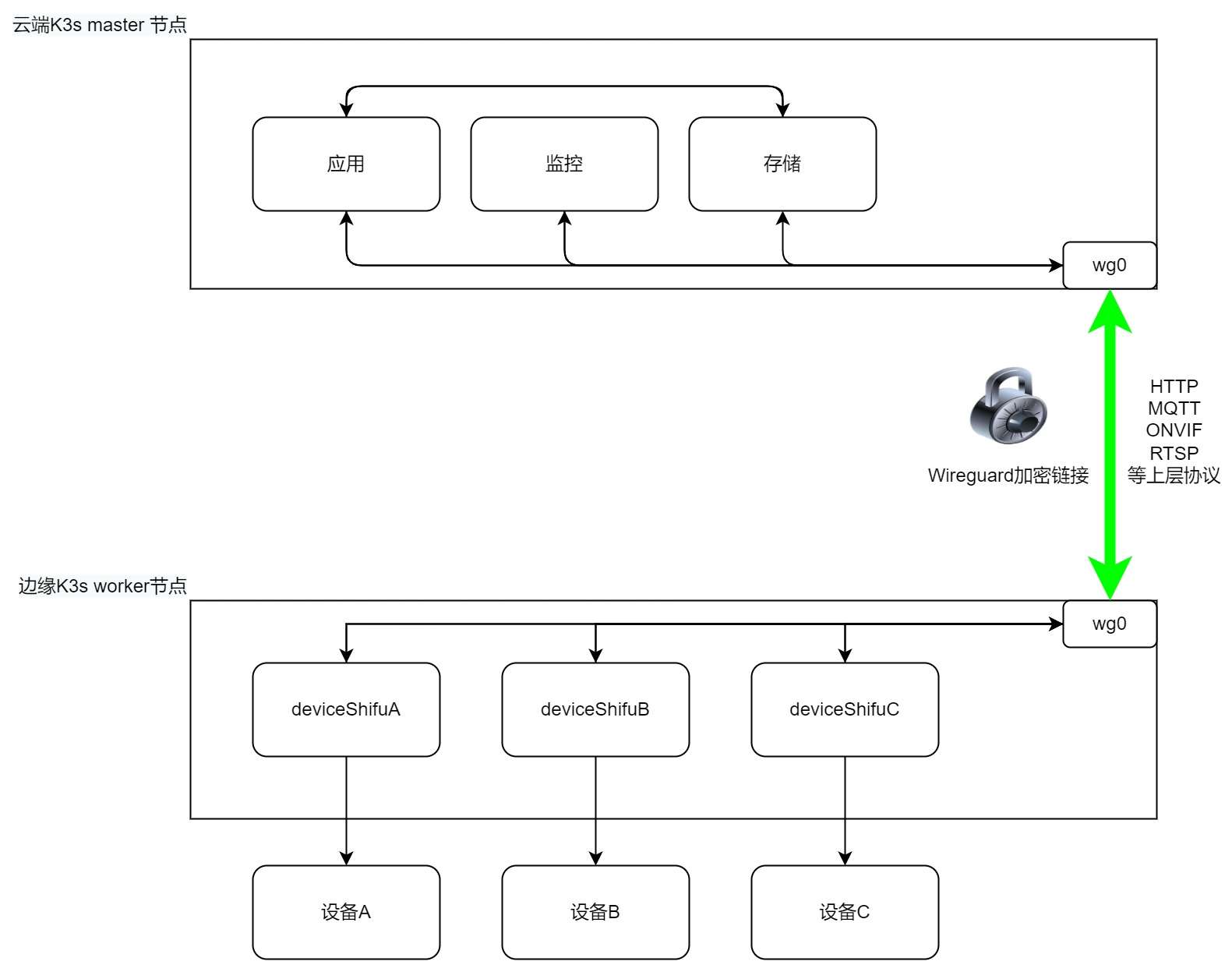

背景

K3s 是SUSE基于Kubernetes做的一款开源的轻量发行版。它可以在边缘端算力受限制的场景中运行,非常适合边缘端有IoT设备的场景。 Shifu作为Kubernetes原生的开源物联网开发框架,利用分布式的思维将每一个IoT设备进行结构性的虚拟化,并将他们的能力通过Kubernetes服务的方式开放给上层应用。

总体架构图

实施指南

必备条件

软件:

| 名称 | 版本 |

|---|---|

| K3s | v1.24.4+k3s1 |

| Wireguard | v1.0.20200513 |

硬件:

| 名称 | 架构 | CPU | 内存 | 硬盘 | 网络 |

|---|---|---|---|---|---|

| master节点 | amd64/arm/arm64 | 至少2个 | 至少2GB | 32GB | 可以联网,有公网IP/可以被worker节点访问到 |

| worker节点 | amd64/arm/arm64 | 至少1个 | 至少2GB | 16GB | 可以联网,可以访问到master节点的IP |

步骤

在服务器端部署wireguard server

a. 利用一件脚本

https://github.com/angristan/wireguard-install

b. 执行以下命令

curl -O https://raw.githubusercontent.com/angristan/wireguard-install/master/wireguard-install.sh

chmod +x wireguard-install.sh

./wireguard-install.shc. 按照命令输入服务器的公网IP,按需添加用户,以下为实际输出,请按需更改:

root@localhost:~# ./wireguard-install.sh

Welcome to the WireGuard installer!

The git repository is available at: https://github.com/angristan/wireguard-install

I need to ask you a few questions before starting the setup.

You can leave the default options and just press enter if you are ok with them.

IPv4 or IPv6 public address: 192.168.0.1 # 这里修改为你的公网IP, 可以通过"curl ip.sb"来获取

Public interface: ens5

WireGuard interface name: wg0

Server's WireGuard IPv4: 10.66.66.1 # wireguard 服务器接口的IPv4地址,如无需求,默认即可

Server's WireGuard IPv6: fd42:42:42::1 # wireguard 服务器接口的IPv6地址,如无需求,默认即可

Server's WireGuard port [1-65535]: 64191 # 这里修改为你的端口,开启端口后需要在主机的防火墙开始允许UDP

First DNS resolver to use for the clients: 114.114.114.114

Second DNS resolver to use for the clients (optional): 119.29.29.29

Okay, that was all I needed. We are ready to setup your WireGuard server now.

.................................

这里输出省略

.................................

Tell me a name for the client.

The name must consist of alphanumeric character. It may also include an underscore or a dash and can't exceed 15 chars.

Client name: client1 # 安装完毕提示输入用户名,自定义即可

Client's WireGuard IPv4: 10.66.66.2 # wireguard 客户端接口的IPv4地址,如无需求,默认即可

Client's WireGuard IPv6: fd42:42:42::2 # wireguard 客户端接口的IPv6地址,如无需求,默认即可

.................................

这里输出省略

.................................

It is also available in /home/ubuntu/wg0-client-client1.conf # 生成给worker节点的配置文件d. 请将脚本最后生成的配置文件

/home/ubuntu/wg0-client-client1.conf保存下来,之后会放到worker节点上 e. 脚本运行完成后接口添加完毕, 可以通过wg show all来查看状态:root@localhost:~# wg show all

interface: wg0

public key: adsdadhkaskdhadkjhs12312kl3j1l2o

private key: (hidden)

listening port: 64191

peer: adsdadhkaskdhadkjhs12312kl3j1l2odsada2

preshared key: (hidden)

allowed ips: 10.66.66.2/32, fd42:42:42::2/128f. 至此,服务器端配置完毕,如果需要生成更多客户端只需再次执行

./wireguard-install.sh按需添加即可在服务器部署K3s server

a. 完成步骤1后,我们可以在服务器端部署K3s,利用wireguard的接口,命令如下:

curl -sfL https://rancher-mirror.oss-cn-beijing.aliyuncs.com/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn K3S_TOKEN=token INSTALL_K3S_EXEC="--advertise-address=10.66.66.1 --flannel-iface=wg0" sh -b. 其中的配置项:

K3S_TOKEN=token,这里的token按需更换,但是worker节点加入的时候需要一致INSTALL_K3S_EXEC="--advertise-address=10.66.66.1 ---flannel-iface=wg0",这里我们配置了两项--advertise-address=10.66.66.1,以wireguard的接口来作为连接的IP,而不是服务器IP--flannel-iface=wg0,告诉K3s的flannel组件使用wg0接口

c. 命令执行后的输出参考:

[INFO] Finding release for channel stable

[INFO] Using v1.24.4+k3s1 as release

[INFO] Downloading hash rancher-mirror.oss-cn-beijing.aliyuncs.com/k3s/v1.24.4-k3s1/sha256sum-amd64.txt

[INFO] Downloading binary rancher-mirror.oss-cn-beijing.aliyuncs.com/k3s/v1.24.4-k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

root@localhost:~#d. 没有问题可以尝试执行

kubectl get pods -A, 所有Pod是running的状态即可~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-b96499967-hs6bn 1/1 Running 0 4m14s

kube-system local-path-provisioner-7b7dc8d6f5-8szzd 1/1 Running 0 4m14s

kube-system helm-install-traefik-crd-9bhdp 0/1 Completed 0 4m14s

kube-system helm-install-traefik-h5q4h 0/1 Completed 1 4m14s

kube-system metrics-server-668d979685-tlvzc 1/1 Running 0 4m14s

kube-system svclb-traefik-99c87d41-cqcnb 2/2 Running 0 3m49s

kube-system traefik-7cd4fcff68-b6cjj 1/1 Running 0 3m49se. 查看master节点状态

kubectl get nodes#kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-172-31-37-138 Ready control-plane,master 8m35s v1.24.4+k3s1f. 至此,服务器端K3s部署完毕

在worker节点配置wireguard

提示本教程中使用的是一个运行在ARM64上的

Ubuntu 20.04.5 LTS的server来进行演示a. 更新软件列表,安装

resolvconf和wireguardapt-get update && apt-get install resolvconf wireguard -yb. 将以下配置放到

/etc/wireguard/wg0.conf备注配置文件中最后一行

AllowedIPs默认是0.0.0.0/0,::/0,需要将其修改为服务器wireguard的IP段,也就是10.66.66.0/24[Interface]

PrivateKey = casasdlaijo()(hjdsasdasdihasddad

Address = 10.66.66.2/32,fd42:42:42::2/128

DNS = 114.114.114.114,119.29.29.29

[Peer]

PublicKey = asdasd21edawd3resaedserw3rawd

PresharedKey = dasda23e134e3edwadw3reqwda

Endpoint = 192.168.0.1:64191 # 这里应该是服务器的gongwangIP以及开放的UDP端口

AllowedIPs = 10.66.66.0/24 # 注意,这里默认是0.0.0.0/0,需要修改c. 执行以下命令,拉起wg0接口

wg-quick up /etc/wireguard/wg0.confd. 测试接口是否通,通过

ping 10.66.66.1, 可以Ping通即生效root@k3s:~# ping 10.66.66.1

PING 10.66.66.1 (10.66.66.1) 56(84) bytes of data.

64 bytes from 10.66.66.1: icmp_seq=1 ttl=64 time=12.9 ms

64 bytes from 10.66.66.1: icmp_seq=2 ttl=64 time=13.1 ms

64 bytes from 10.66.66.1: icmp_seq=3 ttl=64 time=18.9 ms

64 bytes from 10.66.66.1: icmp_seq=4 ttl=64 time=8.21 ms

64 bytes from 10.66.66.1: icmp_seq=5 ttl=64 time=13.3 ms

64 bytes from 10.66.66.1: icmp_seq=6 ttl=64 time=7.66 ms

^C

--- 10.66.66.1 ping statistics ---

6 packets transmitted, 6 received, 0% packet loss, time 5316ms

rtt min/avg/max/mdev = 7.659/12.345/18.863/3.729 ms在worker节点配置K3s agent

a. 安装k3s,加入集群

curl -sfL https://rancher-mirror.oss-cn-beijing.aliyuncs.com/k3s/k3s-install.sh | INSTALL_K3S_MIRROR=cn K3S_TOKEN=token K3S_URL=https://10.66.66.1:6443 INSTALL_K3S_EXEC="--node-ip=10.66.66.3 --flannel-iface=wg0" sh -b. 其中的配置项:

K3S_TOKEN=token,这里的token需要更改为服务器创建时填写的tokenINSTALL_K3S_EXEC="--advertise-address=10.66.66.3 --flannel-iface=wg0",这里我们配置了三项:K3S_URL=https://10.66.66.1:6443,主节点的IP,这里是10.66.66.1--advertise-address=10.66.66.3,以wireguard的接口来作为连接的IP,而不是worker IP--flannel-iface=wg0,告诉K3s的flannel组件使用wg0接口

c. 执行成功后的输出如下:

[INFO] Finding release for channel stable

[INFO] Using v1.24.4+k3s1 as release

[INFO] Downloading hash rancher-mirror.oss-cn-beijing.aliyuncs.com/k3s/v1.24.4-k3s1/sha256sum-arm64.txt

[INFO] Downloading binary rancher-mirror.oss-cn-beijing.aliyuncs.com/k3s/v1.24.4-k3s1/k3s-arm64

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

root@k3s:~#d. 在服务器端可以通过

kubectl get nodes验证是否加入成功,显示节点ready即可#kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-172-31-37-138 Ready control-plane,master 24m v1.24.4+k3s1

k3s Ready <none> 2m52s v1.24.4+k3s1利用云边协同部署Shifu

a. 克隆Shifu:

git clone https://gitee.com/edgenesis/shifu.git修改controller里面的镜像(国内可能拉不下来)

vim shifu/pkg/k8s/crd/install/shifu_install.yml将428行改为:

image: bitnami/kube-rbac-proxy:latestb. 安装Shifu:

kubectl apply -f shifu/pkg/k8s/crd/install/shifu_install.ymlc. 将k3s的worker节点打上标记:

kubectl label nodes k3s type=workerd. 尝试将Pod运行在指定节点上,比如一个

nginxPodkubectl run nginx --image=nginx -n deviceshifu --overrides='{"spec": { "nodeSelector": {"type": "worker"}}}'e. 再通过 kubectl get pods -n deviceshifu -owide , 可以看到我们成功将Pod运行在了边缘节点

k3s上#kubectl get pods -n deviceshifu -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx 1/1 Running 0 42s 10.42.1.3 k3s部署一个海康威视摄像头的数字孪生

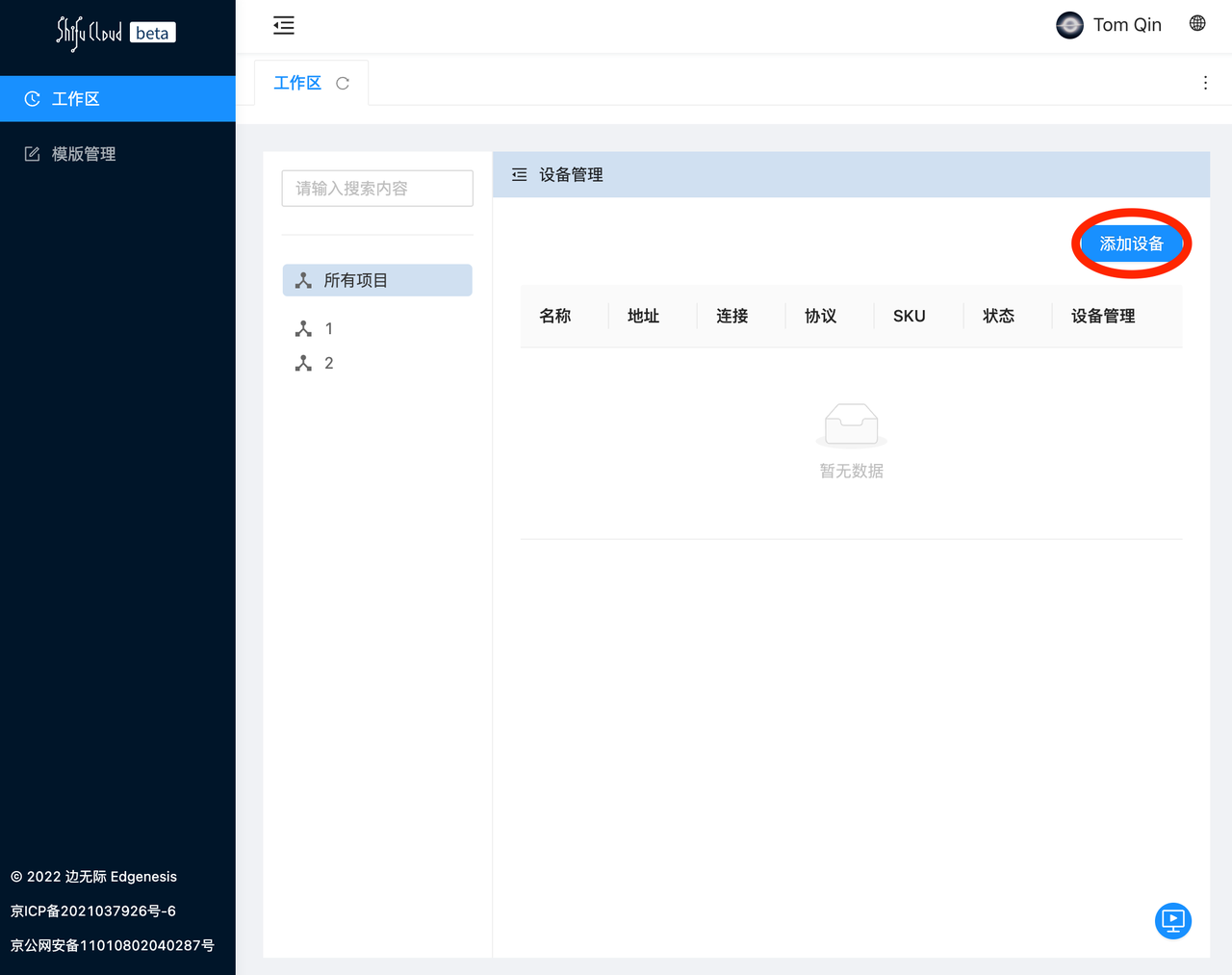

a. 登陆 shifu.cloud

Shifu Cloud 是边无际基于开源物联网开发框架Shifu的一款PaaS产品,它为开发者带来了便携的工具,可以只填写一些设备基本信息来实现物联网设备的接入。在没有Shifu Cloud的情况下需要开发者手动填写一些设备的YAML配置文件,再将虚拟设备进行部署。

b. 点击添加设备

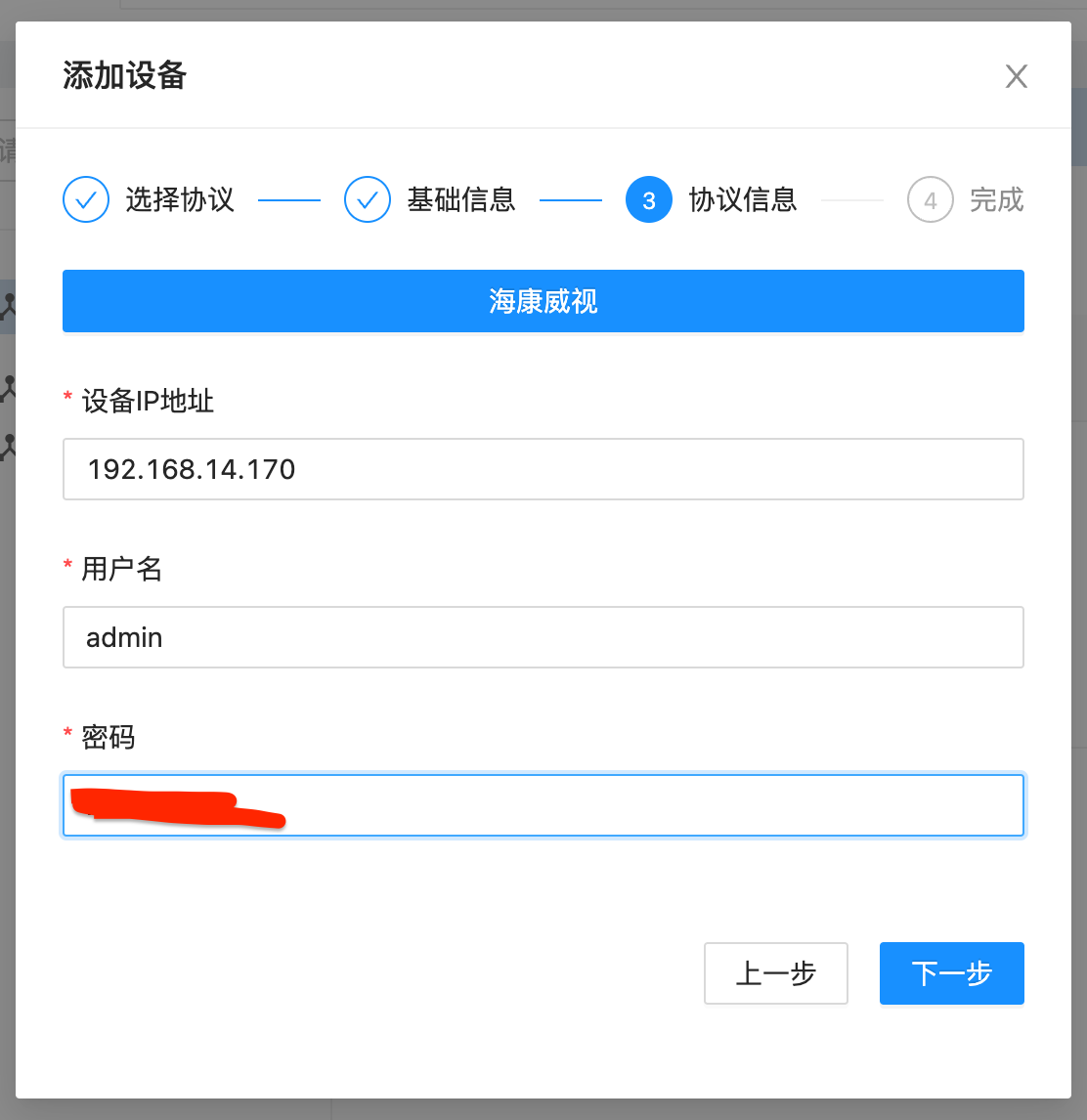

c. 选择私有协议 --> 在下拉菜单中选择海康威视,之后点击下一步

d. 添加设备的基本信息,如设备名,厂商和型号

e. 填写摄像头的IP地址,RTSP用户名以及密码

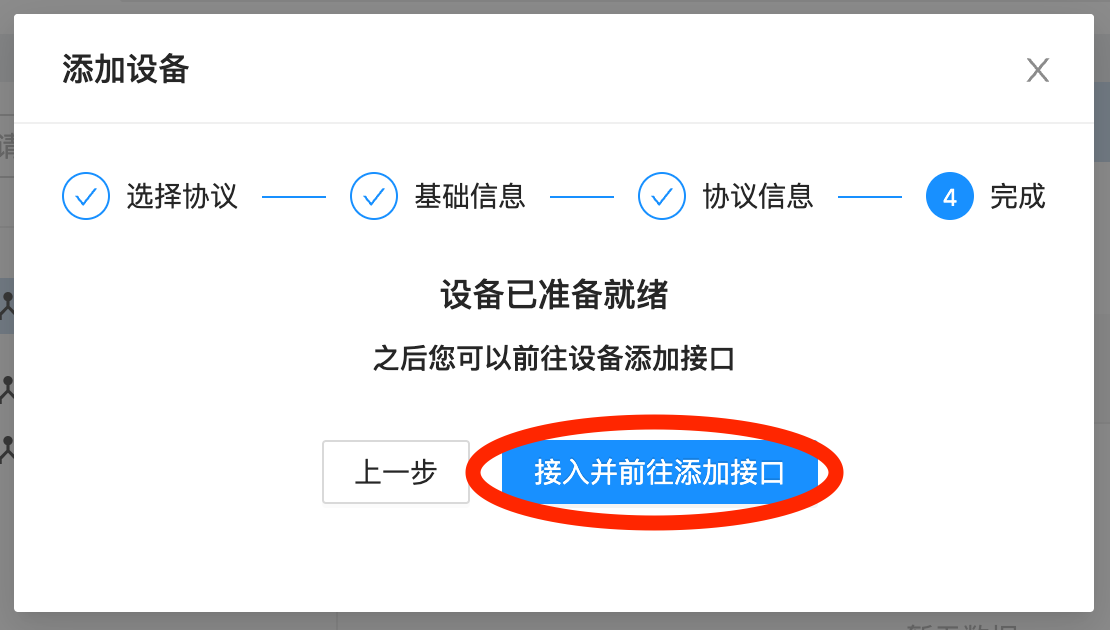

f. 点击接入并前往添加接口

g. 最后点击上传,Shifu Cloud会自动生成 deviceShifu (数字孪生) 的YAML文件

h. 点击复制命令,在K3s的master上执行即可

i. 在命令行复制,输出如下:

root@localhost:~# kubectl apply -f https://******.com/*****/******.yaml

configmap/deviceshifu-devicehikvision-configmap created

service/deviceshifu-devicehikvision-service created

deployment.apps/deviceshifu-devicehikvision-deployment created

edgedevice.shifu.edgenesis.io/edgedevice-devicehikvision created

root@localhost:~#- 背后发生了什么: Shifu Cloud 通过用户填写的一些基本信息,自动生成了设备孪生的YAML文件 (Edge Device,ConfigMap,Deployment和Service),如果您想更深一步的了解请前往 github 中查看具体部署的文件

j. Shifu Cloud 暂不支持加入nodeSelector的功能(敬请期待),默认情况下设备孪生会被部署到master上面,我们需要更新deployment的spec项来让Pod部署在worker节点中

- 我们通过命令获取当前deployment的名字

root@localhost:~# kubectl get deployment -n deviceshifu

NAME READY UP-TO-DATE AVAILABLE AGE

deviceshifu-devicehikvision-deployment 0/1 1 0 16m - 接着通过

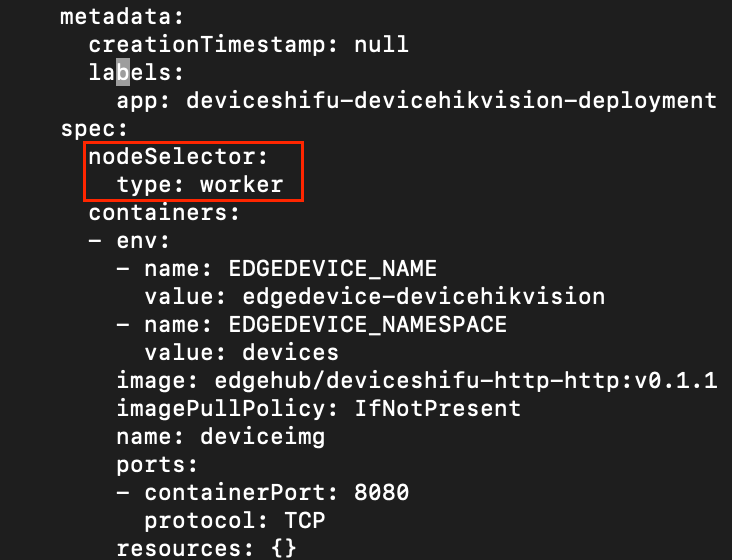

kubectl edit deployment -n deviceshifu deviceshifu-devicehikvision-deployment命令编辑,加入以下两行,保存即可```

......

nodeSelector:

type: worker

......

```

k. 这时我们再次查看,可以看到数字孪生已经被部署到边缘节点 k3s 上

root@localhost:~# kubectl get pods -n deviceshifu -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 165m 10.42.1.4 k3s <none> <none>

deviceshifu-devicehikvision-deployment-5f56fb56d9-2ph5s 2/2 Running 0 21s 10.42.1.6 k3s <none> <none>

运行效果

a. 接下来我们就可以尝试与摄像头进行交互了,让我们在 master 节点上运行一个nginx容器来模拟应用与deviceShifu之间的交互,执行kubectl run nginx-master -n deviceshifu --image=nginx,可以看到 nginx-master 的确运行在 master 节点上:

```

root@localhost:~# kubectl get po -n deviceshifu -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 169m 10.42.1.4 k3s <none> <none>

deviceshifu-devicehikvision-deployment-5f56fb56d9-2ph5s 2/2 Running 0 3m53s 10.42.1.6 k3s <none> <none>

nginx-master 1/1 Running 0 32s 10.42.0.11 localhost <none> <none>

```

b. 我们通过 kubectl exec -it -n deviceshifu nginx -- bash 进入 nginx-master 的命令行,直接与数字孪生交互,获取元信息

root@localhost:~# kubectl exec -it -n deviceshifu nginx -- bash

root@nginx:/# curl deviceshifu-devicehikvision-service/info

<?xml version="1.0" encoding="UTF-8"?>

<DeviceInfo version="2.0" xmlns="http://www.hikvision.com/ver20/XMLSchema">

<deviceName>IP CAMERA</deviceName>

<deviceID>*****</deviceID>

<deviceDescription>IPCamera</deviceDescription>

<deviceLocation>hangzhou</deviceLocation>

<systemContact>Hikvision.China</systemContact>

<model>DS-2DE3Q140CN-W</model>

<serialNumber>DS-*****</serialNumber>

<macAddress>c8:02:8f:c8:86:11</macAddress>

<firmwareVersion>V5.5.800</firmwareVersion>

<firmwareReleasedDate>build 210816</firmwareReleasedDate>

<encoderVersion>V7.3</encoderVersion>

<encoderReleasedDate>build 200601</encoderReleasedDate>

<bootVersion>V1.3.4</bootVersion>

<bootReleasedDate>100316</bootReleasedDate>

<hardwareVersion>0x0</hardwareVersion>

<deviceType>IPCamera</deviceType>

<telecontrolID>88</telecontrolID>

<supportBeep>true</supportBeep>

<supportVideoLoss>false</supportVideoLoss>

<firmwareVersionInfo>B-R-E7-0</firmwareVersionInfo>

</DeviceInfo>

通过以下命令可以直接控制摄像头:

curl deviceshifu-devicehikvision-service/move/{up/down/left/right}

如果我们想查看摄像头当前拍摄以及当前视频流,需要将摄像头的service通过 kubectl port-forward -n deviceshifu service/deviceshifu-devicehikvision-service 30080:80 --address 0.0.0.0 代理到本地

在浏览器中输入服务器的IP加端口号,可以直接查看图像/视频流:

<SERVER_IP>:30080/capture

<SERVER_IP>:30080/stream

总结

在此篇文章中,我们分享了如何让Shifu运行在 K3s 的集群中实现云边协同的控制和数据收集。

在将来的文章中,Shifu Cloud 也会进一步与Kubernetes 进行整合,增加对于边缘节点的部署控制以及自动将设备孪生部署到集群中,无需手动复制。

非常感谢您看到了这里,我们期待您的反馈,如果觉得文章写得不错或者有任何建议请毫不犹豫地留言。